How dangerous is AI?

I attended two talks by AI greats in the last few weeks: one by Geoffrey Hinton last month, and one by Yann LeCun at an event in New York yesterday.

They represent two different visions of the potential dangers of AI. Hinton sees us at a crossroads where we need to tread very carefully lest we end up destroying humanity. LeCun, on the other hand, tells us that for all its hype, today's AI has less intelligence than a cat, and that concerns about the power of AI are grossly exaggerated. So who's right? In fact, I agree with both in some respects.

I agree with LeCun that the capabilities of AI are still very narrow and limited when compared to humans. But, like Hinton, I do worry. A lot.

What I worry about is not what AI can do, but rather how we collectively react to it: the way we enthusiastically adopt it in our lives without much thought.

Even with the limited capabilities of AI today, and what will likely be possible in the next couple of years, I think we can easily end up ruining our society. I believe we underestimate the risk of flooding ourselves with mediocre content that can be created at near-zero cost. We underestimate how AI, along with other factors, will necessitate a complete rethinking of our education system. The ability to refine everything with AI will make us less accepting of the originality, but also the imperfection, of human-created content. Our protocols for communicating with one another will change as we increasingly opt for AI as an intermediary. And as we become more and more reliant on AI, that reliance will affect our autonomy.

While I do not fear human extinction, I am concerned about the devaluation of our culture and of humans in general.

What does it mean to be Human?

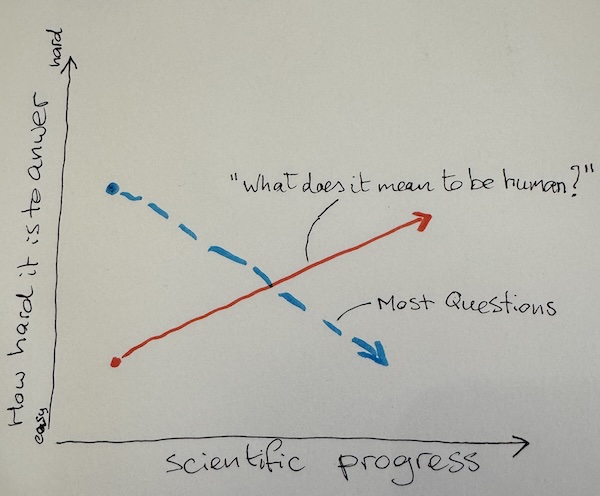

Most questions get easier over time. How the sun and the planets move around the Earth (or rather, how the Earth and the other planets move around the sun) was a mystery for a long time, but telescopes and math helped us come up with good models. It took some time to figure out the cause of bubonic plague, but medical science got the better of it, and today the disease is rare and largely treatable.

I have been exploring the question of what it means to be human, and I realized that it is a rare question in that it gets harder to answer the more technological and scientific progress we make.

A popular answer used to be that "sophisticated use of language" was what distinguished us from plants and animals and Tamagotchis and robots. But the latest LLMs are almost as good as humans at using language(s), and sometimes better.

Genetic technologies such as CRISPR/Cas9 gene editing have the potential to confuse our ideas about the nature of humans even more. We like to think that each one of us is unique, but cloning may make that untrue.

Virtual reality makes us spend time with, get attached to, and perhaps even love entities that don't exist, at least not physically.

And AI effortlessly takes over many tasks that humans have always considered their particular contribution to the world, their source of fulfillment.

I cannot even imagine what people 50 or 100 years from now will think it means to be Human. Perhaps it will have become a moot question at that point.